I am a first-year CS Ph.D. student at Cornell University working with Prof. Wei-Chiu Ma. Previously, I received my M.S. in CS from the University of Pennsylvania, advised by Prof. Lingjie Liu. Before that, I received my B.E. in CS with highest distinction from Nanyang Technological University, Singapore, where I had the great opportunity to work at MMLab@NTU, advised by Prof. Chen Change Loy and Prof. Bo Dai. During my undergraduate studies, I also spent a wonderful summer in 2022 visiting UCSD and working with Prof. Hao Su.

My research focuses on high-quality 3D generation that leverages abundant 2D data and the powerful priors of large foundation models through differentiable neural rendering. I am also dedicated to detailed human generation and reconstruction, with experience in reconstructing real-time renderable human avatars from video and in generating 3D-aware, high-fidelity human faces. My goal is to develop models that learn from rich 2D data through differentiable rendering while remaining consistent and adhering to physical laws in 3D/4D space.

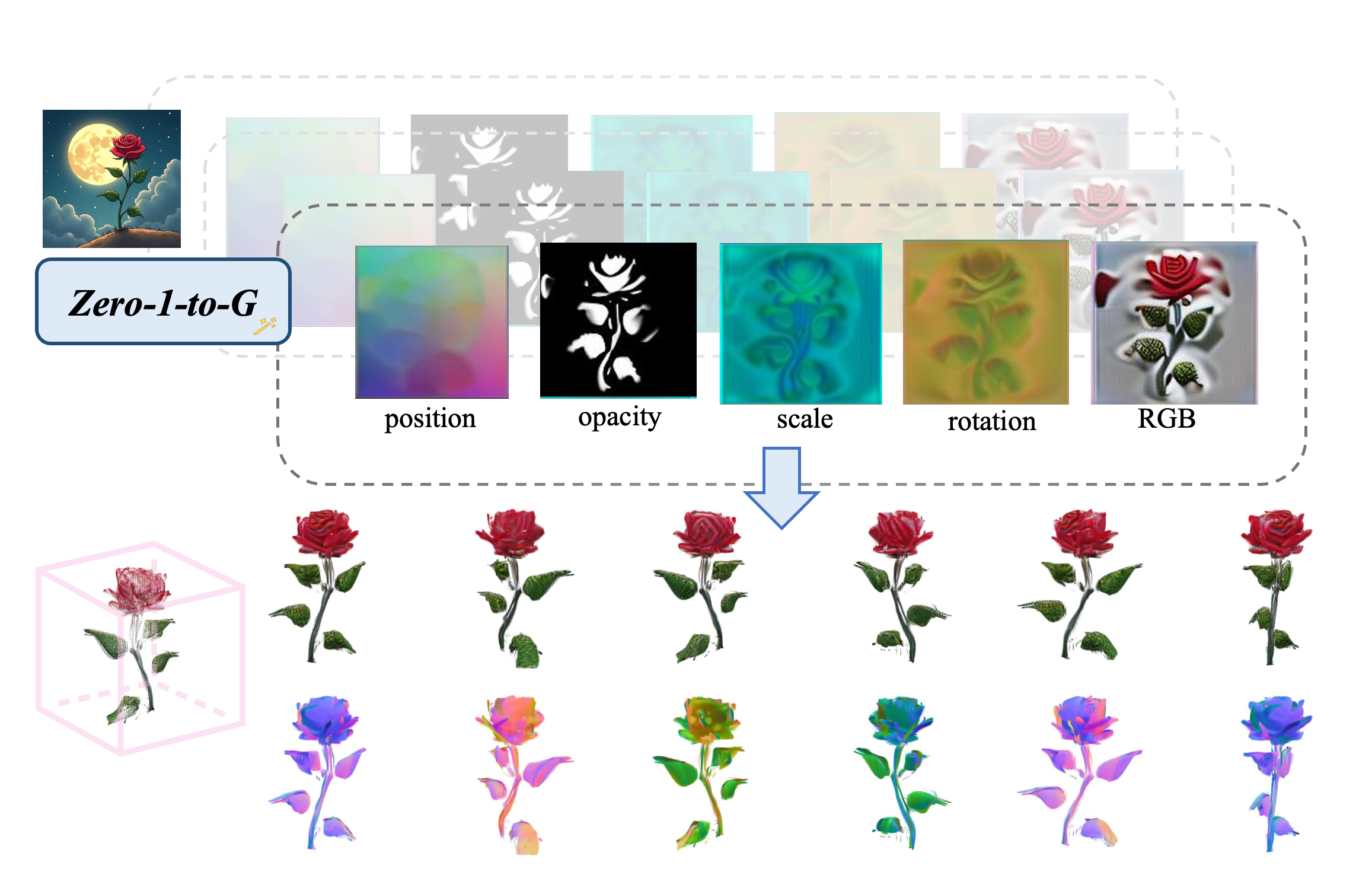

Xuyi Meng*, Chen Wang*, Jiahui Lei, Kostas Daniilidis, Jiatao Gu, Lingjie Liu TMLR 2025 project page / paper / Github (code coming soon)

We reframe the challenging task of direct 3D generation within a 2D diffusion framework by decomposing the splatter image into a set of attribute images. Using a pretrained 2D diffusion model enables high-quality 3D generation and good generalization to in-the-wild data.

Yushi Lan, Fangzhou Hong, Shuai Yang, Shangchen Zhou, Xuyi Meng, Bo Dai, Xingang Pan, Chen Change Loy ECCV 2024 project page / paper / Github

LN3Diff creates a high-quality 3D object mesh from text within 8 SECONDS.

Yushi Lan, Xuyi Meng, Shuai Yang, Chen Change Loy, Bo Dai CVPR 2023 project page / paper / video / Github

We propose E3DGE, an encoder-based 3D GAN inversion framework that yields high-quality shape and texture reconstruction.

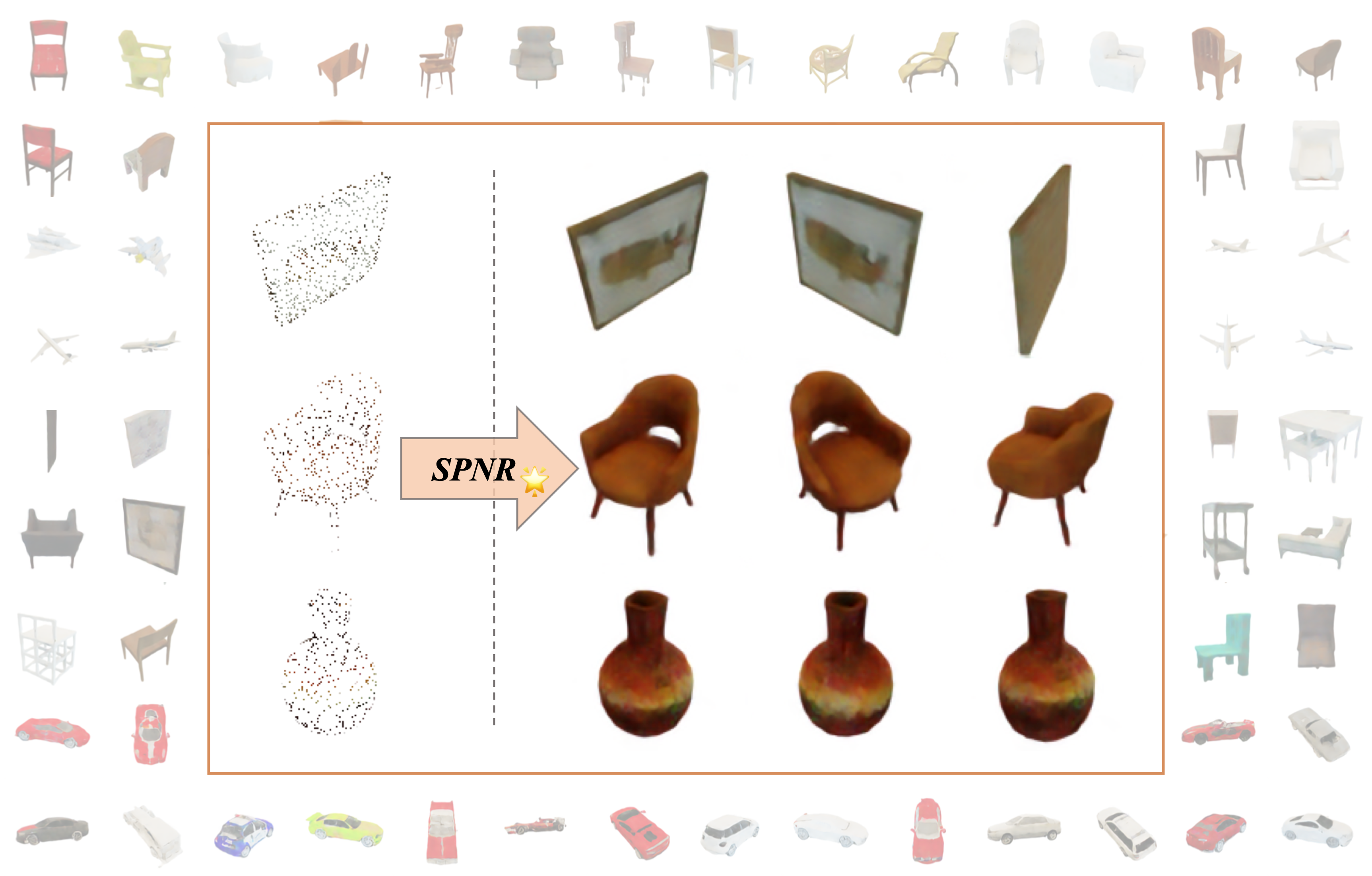

Xuyi Meng, Jialin Zhang, Fanbo Xiang, Jiayuan Gu, Xiaoshuai Zhang, Hao Su paper / supplementary

We present SPNR, which encodes sparse point clouds into neural volume representations to produce images with high visual quality. Given sparsely sampled surface points, SPNR generates disentangled density and color volumes and uses volumetric rendering to produce view-consistent, high-quality images.

TA for CIS7000: Neural Scene Representation and Neural Rendering, Fall 2024

🎹 I play musical instruments: piano and guzheng. [Recordings here]

💃 I have performed traditional Chinese dance and Latin dance.

🌎 I can speak English, Mandarin, and French.

Thanks to Jon Barron's website for the amazing source code 🧚